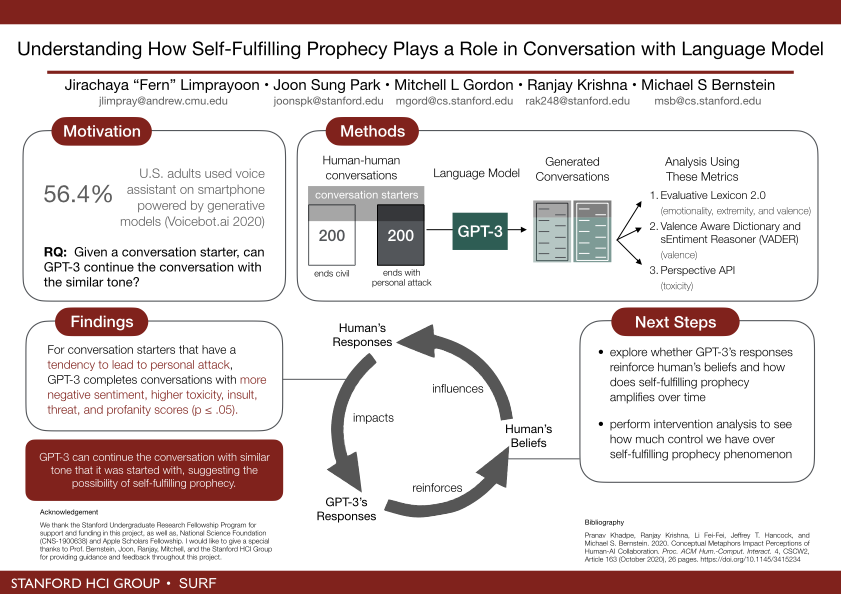

Understanding How Self-Fulfilling Prophecy Plays a Role in Conversations with Language Models

Date:

You are all invited! My talk will take place at 2:15pm on August 12, 2021. If you plan to come, please fill out this Google form to acknowledge that the session will be recorded and to request any accommodations you may need.

With the rise of artificial intelligence, chatbots have become more generative, meaning that they can learn from input queries and respond to them on the fly. Generative chatbots are trained on human-human conversations, where self-fulfilling prophecy has been shown to play a role. An example of self-fulfilling prophecy: you believe that the other party is nice and accepting, so you go out of your way to start a conversation and act friendly around them. The other party then sees that you seem to like them and acts friendly in response, leading you to think that they live up to their nice reputation. However, the resulting pleasant interaction is mostly a product of your initial belief.

Our research focuses on understanding whether a language model like GPT-3 can mimic the way humans talk to it, and potentially respond in line with the beliefs humans hold about it. This can help us determine whether the self-fulfilling prophecy phenomenon can occur during such interactions. Understanding it can help us moderate human-chatbot interactions so that they abide by specific community guidelines.